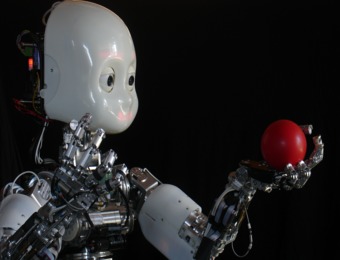

To achieve social interaction between humans and robots, it is essential to develop cognitive architectures that can dynamically coordinate multiple cognitive processes, including action generation, dynamic visual recognition, and attention control, in a context-sensitive manner. For this purpose, my group has spent a decade developing a series of self-organizing neurodynamic models for use on humanoid robots. First, we developed the multiple timescales recurrent neural network (MTRNN), in which a temporal hierarchy for action generation self-organizes when multiple scales of temporal constraints are applied to the neural activity in the model. Second, we developed the multiple spatio-temporal neural network (MSTNN), in which a spatio-temporal hierarchy for dynamic visual recognition self-organizes when multiple scales of spatio-temporal constraints are applied to the neural activity. In the third stage, these two models were integrated into the Visuo-Motor Deep Dynamic Neural Network (VMDNN), with the aim of enabling humanoid robots to acquire skills for goal-directed actions while interacting with humans. My talk will explain how contextual “synergetic” coordination among vision, motor, and attention processes can emerge via deep and consolidative learning in our proposed VMDNN.
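As a rough illustration of what a temporal constraint on neural activity can look like, the sketch below steps a small recurrent network in which each unit has its own time constant. The abstract gives no equations, so the leaky-integrator update used here is an assumption, chosen because it is a common way of describing multiple-timescale recurrent networks. The names `mtrnn_step` and `simulate`, the unit counts, the time constants, and the random weights are all hypothetical, and no learning is performed.

```python
import math
import random


def mtrnn_step(u, W, b, tau):
    """One leaky-integrator update (assumed form, not taken from the abstract).

    u   : list of internal states, one per unit
    W   : recurrent weight matrix as a list of rows
    b   : list of biases
    tau : list of time constants; a larger tau makes a unit change more slowly
    """
    y = [math.tanh(v) for v in u]  # firing rates from internal states
    new_u = []
    for i in range(len(u)):
        drive = sum(W[i][j] * y[j] for j in range(len(u))) + b[i]
        # Blend the previous state with the new drive; 1/tau is the blend rate.
        new_u.append((1.0 - 1.0 / tau[i]) * u[i] + drive / tau[i])
    return new_u


def simulate(steps=200, n_fast=8, n_slow=4, tau_fast=2.0, tau_slow=50.0, seed=0):
    """Run an untrained network with a fast group and a slow group of units."""
    rng = random.Random(seed)
    n = n_fast + n_slow
    tau = [tau_fast] * n_fast + [tau_slow] * n_slow
    scale = 1.0 / math.sqrt(n)
    W = [[rng.gauss(0.0, scale) for _ in range(n)] for _ in range(n)]
    b = [0.0] * n
    u = [rng.gauss(0.0, 1.0) for _ in range(n)]
    trace = [u]
    for _ in range(steps):
        u = mtrnn_step(u, W, b, tau)
        trace.append(u)
    return trace, tau


if __name__ == "__main__":
    trace, tau = simulate()
    print(len(trace), "states recorded for", len(tau), "units")
```

With all weights and biases set to zero, a unit with `tau = 2` loses half of its state in one step, while a unit with `tau = 50` keeps 98% of it. In this kind of model, that difference in decay rate is what lets slow units hold context while fast units track moment-to-moment detail.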

Full-Day Workshop (in conjunction with Humanoids 2015, South Korea, November 3, 2015)